Time For A Change At SPSP Journals

Disclaimer: This post reflects my own opinions and is not an official statement of policy at the Society for Personality and Social Psychology. I received permission from SPSP’s Executive Director to discuss the outcomes of the recent SPSP board meeting.

Last week, I went to St. Louis to attend my final meeting as member at large for the Society for Personality and Social Psychology. Board members and elected officers use these meetings to discuss new policies and to make decisions about the society’s goals and priorities. Somewhat surprisingly, in my three years on the board, one topic that had never come up (as far as I can remember) was whether the society was doing enough to address concerns about the transparency and openness of research published in its journals. Despite the fact that many of the most salient examples from the replication crisis (or whatever we want to call it) came from within social psychology, the main society for supporting and promoting this work had made almost no changes to its journal policies over the past decade. So in my final meeting, I proposed a series of policy changes that could be implemented at SPSP journals, including Personality and Social Psychology Bulletin, Personality and Social Psychology Review (a journal that focuses primarily on reviews), and with possible support from a broader consortium of four societies, Social Psychological and Personality Science.

Before describing this proposal, and to give a bit of history, I want to acknowledge that the society has taken a few baby steps towards reform. The history of that response is documented here, with two relevant entries describing these efforts. First, in 2013, the society convened a task force to study publication and research practices. In my view, however, the policies that emerged from this task force are pretty anemic: It appears that the only recommendations were to reaffirm the already existing (and poorly enforced) field-wide standards for data sharing and methods reporting. Notably, the report from this task force called for further review of these issues, but apparently that never happened. Later, in 2015, the society (but not its journals) apparently voted to endorse the Transparency and Openness Promotion (TOP) Guidelines. However, after doing a bit of checking, I found that the society only voted to support these guidelines “in principle” without actually being willing to become an official signatory. No policies were changed as a result of this endorsement.

I also want to note that although change in official journal policies at SPSP has been limited, there are areas within the society where some progress has been made. For instance, the society’s training committee has done an excellent job incorporating sessions into the annual meeting that address research practices in the field. Conference attendees have had numerous opportunities to learn about preregistration, replication, meta-analytic techniques, Bayesian analyses, and much more. The problem is that researchers are now being trained to use practices that the journals themselves don’t value or reward. The journals do at least allow practices like preregistration and data sharing. But as Simine Vazire has said, when transparently reported, preregistered studies with open data are evaluated alongside less transparently reported research, the former will often look worse in comparison because their flaws are more visible.

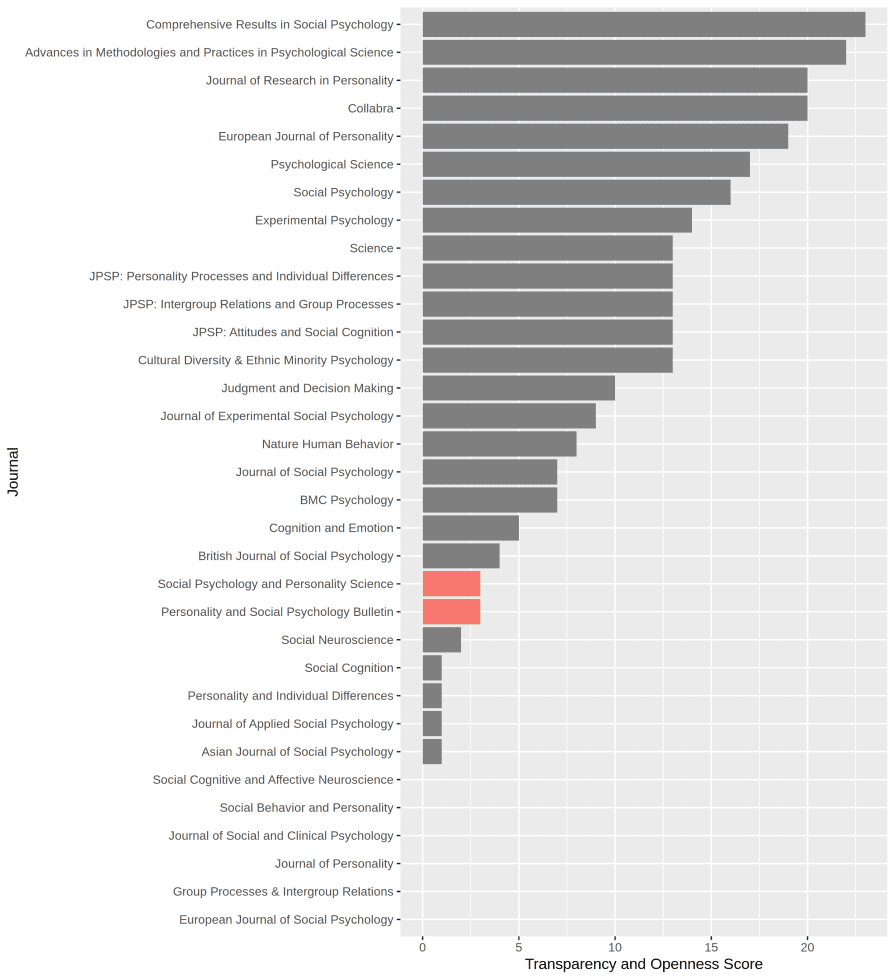

The society is now in a position where its journals have fallen far behind alternative outlets for social and personality psychology in regard to transparency, openness, and policies that foster replicability. The Center for Open Science collects data on where various social and personality psychology journals stand on such policies, and SPSP’s journals currently do not look so good in comparison. The following figure shows one possible ranking, which awards “points” for various policies regarding preregistration, data sharing, consideration of replication studies, and other open practices (for details, see here). To be sure, this is not the only way to compare these journals, and there may be policies and initiatives that are not captured in this ranking, but it would be hard to come up with an alternative set of criteria that would dramatically change this picture.

Journal Policies Toward Openness and Transparency. SPSP Journals highlighted in red. Source: Center for Open Science (https://osf.io/9ydm3/). Data as of April 29, 2019.

In response to the lack of attention that these issues have received, I proposed a series of changes to SPSP journals that I hoped the board would consider. The full report with my justifications is here, but the main proposals are:

- Have each journal become a signatory of the TOP guidelines

- Endorse Level II for each TOP guideline, including requirements for:

  - Appropriate citations for materials and data

  - Open data (with exceptions noted at submission)

  - Open code (with exceptions noted at submission)

  - Open materials (with exceptions noted at submission)

  - Adherence to design and analysis transparency standards

  - Statement of whether the studies were preregistered; if so, preregistration documents must be made available for review

  - Statement of whether the analysis plan was preregistered; if so, preregistration documents must be made available for review

  - Encouragement of replication studies, which are evaluated using results-blind review

- Adopt badges for open science practices

- Adopt the Pottery Barn Rule for replication studies

- Partner with PsyArXiv to promote preprints for articles published in SPSP journals

I suggested these particular policies specifically because each has already been implemented by at least some of the journals listed in the figure above, so we already have some ideas about how these reforms are playing out. This makes adoption of these policies less risky than it might otherwise be. In addition, I want to be clear that these are not the only policies that I’d like to see implemented at SPSP journals, but the ones that I thought might be most palatable, given my (perhaps inaccurate) view of where the society is right now regarding these issues. For instance, I would love to see SPSP journals adopt the registered report submission format, as I think that this mechanism is one of the strongest ways to address the concerns about transparency, rigor, and replicability that have been raised, and because I think that much of the research that is published at SPSP journals would be well suited to this type of submission process. I decided not to include this in my proposal because it was already very long and because I didn’t perceive a will for such a change among the leadership; but I would love to see the society and its editors move forward on such policies in the future. I also acknowledge that these policies are not the only paths towards more transparent and replicable research; they are just the ones that I thought were most feasible at this time.

I’m happy to note that the reception to at least some of these proposals was reasonably positive, and the board agreed to take action on the first two. Specifically, the board agreed to have SPSP journals become signatories of the TOP guidelines and, with some additional work from the publication committee regarding implementation, to adopt Level II for all TOP guidelines.[1] These changes, especially the requirements for open data, materials, and code, along with the encouragement of replication studies, would be a big and promising shift for the journals if they are implemented.

Although I am encouraged by the progress that was made at the last meeting, I wanted to post my full proposal publicly for two reasons. First, although the board officially voted to adopt Level II of the TOP guidelines, I am slightly concerned that during the deliberations about how these policies will be implemented, those involved might decide to walk back from this initial commitment before these policies can actually be implemented. Hopefully some public acknowledgment of this initial vote can prevent that from happening. Second, I think that adopting these guidelines is just the first step towards a set of broader reforms that could greatly improve the transparency, openness, and rigor of research published at SPSP journals. I hope that others will join me in encouraging the board to consider additional steps—possibly those I suggested in my proposal, but including many others—that might help these journals (journals that were initially slow to adopt and encourage open practices) become models for the field.

1. The new policies would likely be implemented once new editors are appointed, which for PSPB will be in 2020.